Virtual Netvisor Deployment Example

Inband Virtual Netvisor (vNV) Deployment

Overview

Typically used in conjunction with UNUM, Virtual Netvisor (vNV) deploys as a seed switch.

vNV reduces the impact of UNUM polling on physical switches and installs on the same ESXi server as UNUM.

This Use Case example demonstrates how to connect vNV through Inband communication.

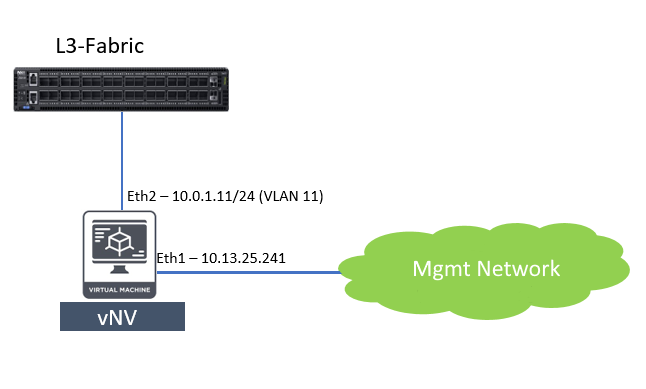

Topology

As illustrated in the topology example shown above, there are two ways to connect vNV to the fabric through inband.

- vNV uses VLAN 1 to communicate to the Fabric inband network.

- vNV uses a separate Network and uses routing to communicate with the Fabric inband network.

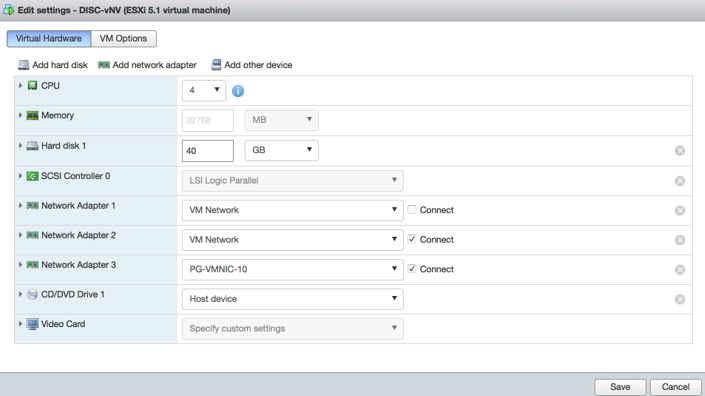

Virtual Machine (VM) Properties

A vNV has three interfaces, eth0, eth1, and eth2.

In the VM, these are identified as “Network Adapter 1”, “Network Adapter 2”, and “Network Adapter 3”, respectively.

Eth0 (Network Adapter 1) is not connected in the VM configuration.

Eth1 (Network Adapter 2) and eth2 (Network Adapter 3) are for management and inband communication.

The following ESXi Virtual Hardware settings example illustrates the network adapter configuration.

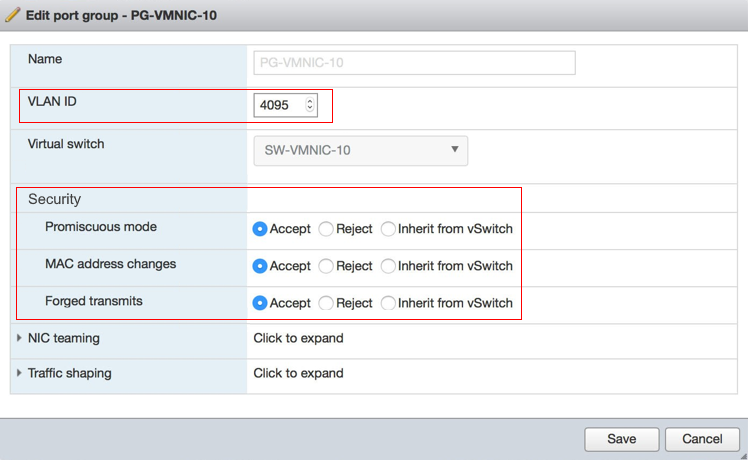

Port Group Configuration

Set the following Port Group (PG) properties for the port groups associated with the VM interfaces “Network Adapter 2” and “Network Adapter 3”:

- VLAN ID: 4095

- Security, Promiscuous mode: Accept

- Security, MAC address changes: Accept

- Security, Forged transmits: Accept
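The required values above can be written down as a small checklist and compared against what is actually configured. The following is a minimal sketch only: the dictionary keys and the `check_port_group` helper are hypothetical names chosen for illustration, and the sample values are assumed to have been transcribed by hand from the ESXi Edit Port Group dialog, not read from any VMware API.

```python
# Required port group settings, taken from the list above.
REQUIRED = {
    "vlan_id": 4095,
    "promiscuous_mode": "Accept",
    "mac_address_changes": "Accept",
    "forged_transmits": "Accept",
}


def check_port_group(settings):
    """Return (key, expected, actual) for every setting that does not match."""
    return [
        (key, expected, settings.get(key))
        for key, expected in REQUIRED.items()
        if settings.get(key) != expected
    ]


# Hypothetical values for the port group behind "Network Adapter 2".
pg_adapter2 = {
    "vlan_id": 4095,
    "promiscuous_mode": "Accept",
    "mac_address_changes": "Accept",
    "forged_transmits": "Reject",  # deliberately wrong, to show a mismatch
}

for key, expected, actual in check_port_group(pg_adapter2):
    print(f"{key}: expected {expected}, found {actual}")
```

An empty result means the port group matches all four required settings.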

The following ESXi Edit Port Group settings example illustrates the PG security configuration.

vNV Configuration

Configure vNV so that it can reach the in-band IPs of the other switches in the Fabric, and so that the vNV in-band network is reachable from the rest of the Fabric. There are two ways to connect vNV to the Fabric through inband:

- vNV uses VLAN 1 to communicate with the Fabric inband network.

- vNV uses a separate network and routing to communicate with the Fabric inband network.

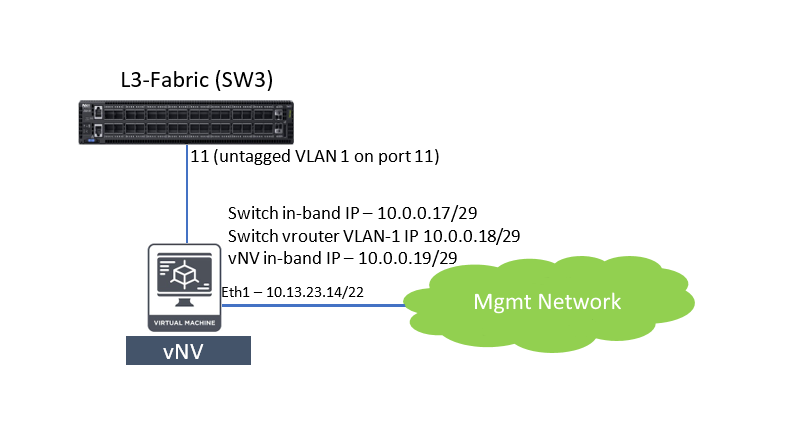

vNV uses VLAN 1 to communicate with the Fabric Inband Network.

Refer to the topology above, which is used in this scenario. In this example, vNV is connected directly to one of the switches in the Fabric (SW3).

This switch has an in-band IP of 10.0.0.17/29 and a vrouter interface IP of 10.0.0.18/29 in VLAN-1.

- SW3 is configured with an in-band IP of: 10.0.0.17/29

- SW3 is configured with a vrouter interface IP of 10.0.0.18/29 for VLAN-1: vrouter-interface-add vrouter-name vrouter-SW3 ip 10.0.0.18/29 vlan 1

- SW3 has the following switch-route created for inband communication: switch-route-create network 10.0.0.0/24 gateway-ip 10.0.0.18

- VLAN 1 is configured as untagged on port 11 of SW3. To verify, run the command: port-vlan-show ports 11

CLI (network-admin@SW3*) > port-vlan-show ports 11

| port | vlans | untagged-vlan | description | active-vlans |
| ---- | ----- | ------------- | ----------- | ------------ |
| 11 | 1 | 1 | | 1 |

- The vNV running on the VM has an in-band IP of 10.0.0.19/29.

- vNV has the following switch-route created for inband communication: switch-route-create network 10.0.0.0/24 gateway-ip 10.0.0.18

- With this configuration, vNV should be able to ping the in-band IPs of the other switches in the fabric.

CLI (network-admin@vNV-UNUM) > ping 10.0.0.17

PING 10.0.0.17 (10.0.0.17) 56(84) bytes of data.

64 bytes from 10.0.0.17: icmp_seq=1 ttl=64 time=5.63 ms

64 bytes from 10.0.0.17: icmp_seq=3 ttl=64 time=1.10 ms

^C

--- 10.0.0.17 ping statistics ---

6 packets transmitted, 5 received, 16% packet loss, time 5019ms

rtt min/avg/max/mdev = 1.103/2.038/5.639/1.800 ms

ping: Fabric required. Please use fabric-create/join/show

CLI (network-admin@vNV-UNUM) >

- Now vNV should be able to join the fabric (in this example: test_case):

CLI (network-admin@vNV-UNUM) > fabric-join switch-ip 10.0.0.17

Joined fabric test_case. Restarting nvOS...

Connected to Switch vNV-UNUM; nvOS Identifier:0x4db3f218; Ver: 5.2.0-5020015650

CLI (network-admin@vNV-UNUM) >

CLI (network-admin@vNV-UNUM) > fabric-node-show format name,fab-name,mgmt-ip,in-band-ip,state,

| name | fab-name | mgmt-ip | in-band-ip | state |
| -------- | --------- | -------------- | ------------ | ------ |
| vNV-UNUM | test_case | 10.13.23.14/23 | 10.0.0.19/29 | online |
| SW3 | test_case | 10.13.22.221/23 | 10.0.0.17/29 | online |
| SW1 | test_case | 10.13.22.220/23 | 10.0.0.1/30 | online |
| SW4 | test_case | 10.13.20.221/23 | 10.0.0.13/30 | online |
| SW2 | test_case | 10.13.20.220/23 | 10.0.0.5/30 | online |

CLI (network-admin@vNV-UNUM) >
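The addressing in this scenario works because the SW3 in-band IP, the SW3 vrouter interface, and the vNV in-band IP all fall in the same /29, and because the 10.0.0.0/24 switch-route covers every in-band IP in the fabric. As an illustration only, the following Python sketch checks those relationships with the standard `ipaddress` module. The addresses are copied from this example; the variable names are arbitrary.

```python
import ipaddress

# Addresses from the VLAN 1 scenario in this example.
sw3_inband = ipaddress.ip_interface("10.0.0.17/29")
sw3_vrouter = ipaddress.ip_interface("10.0.0.18/29")
vnv_inband = ipaddress.ip_interface("10.0.0.19/29")

# switch-route-create network 10.0.0.0/24 gateway-ip 10.0.0.18
route_network = ipaddress.ip_network("10.0.0.0/24")
route_gateway = ipaddress.ip_address("10.0.0.18")

# In-band IPs of the other fabric nodes, from the fabric-node-show output.
fabric_inband = ["10.0.0.17/29", "10.0.0.1/30", "10.0.0.13/30", "10.0.0.5/30"]

# All three VLAN 1 addresses must share one subnet.
same_subnet = sw3_inband.network == sw3_vrouter.network == vnv_inband.network

# The switch-route gateway must be directly reachable from vNV.
gateway_on_link = route_gateway in vnv_inband.network

# The switch-route must cover every in-band IP in the fabric.
covered = all(
    ipaddress.ip_interface(addr).ip in route_network for addr in fabric_inband
)

print("shared subnet:", vnv_inband.network)
print("same subnet:", same_subnet)
print("gateway on link:", gateway_on_link)
print("fabric covered by switch-route:", covered)
```

If any of the three checks prints `False` for a different addressing plan, the ping and fabric-join steps in this scenario are unlikely to succeed as shown.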

vNV uses a Separate Network and Routing to Communicate to the Fabric Inband Network

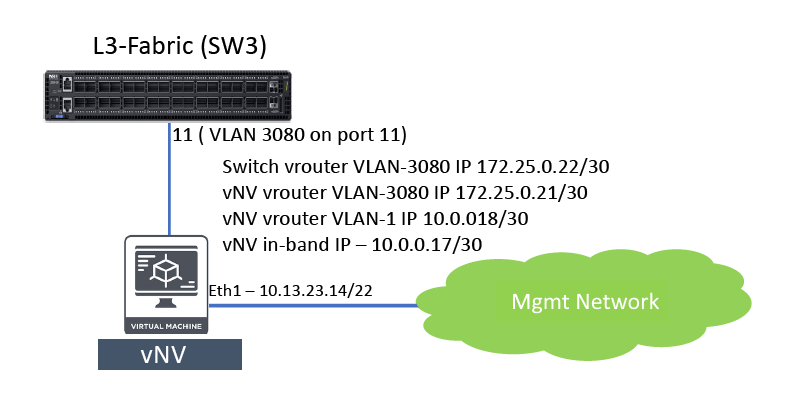

Refer to the topology above, which is used in this scenario. In this example, VLAN 3080 links vNV to the fabric.

Note: The vNV in-band IP is in a different /30 subnet.

The following steps are needed to accomplish this integration:

- Configure VLAN 3080 on port 11: vlan-create id 3080 scope local ports 11

CLI (network-admin@SW3*) > port-vlan-show ports 11

| port | vlans | description | active-vlans |
| ---- | ----- | ----------- | ------------ |
| 11 | 3080 | | none |

- Create a vrouter interface for VLAN 3080 on Switch SW3: vrouter-interface-add vrouter-name vrouter-SW3 ip 172.25.0.22/30 vlan 3080

- Make sure the correct in-band IP, 10.0.0.17/30, is configured on vNV during initial setup.

- Create a vrouter on vNV: vrouter-create name vNV-UNUM fabric-comm

- Create VLAN 3080 on vNV: vlan-create id 3080 scope local ports all

- On vNV, create a vrouter interface in VLAN 1 so that the in-band network can reach the rest of the fabric: vrouter-interface-add vrouter-name vNV-UNUM ip 10.0.0.18/30 vlan 1

- Create a vrouter interface in VLAN 3080 for vNV to communicate to SW3: vrouter-interface-add vrouter-name vNV-UNUM ip 172.25.0.21/30 vlan 3080

Now vNV should be able to ping the VLAN 3080 interface of SW3.

CLI (network-admin@vNV-UNUM) > vrouter-ping vrouter-name vNV-UNUM host-ip 172.25.0.22

PING 172.25.0.22 (172.25.0.22) 56(84) bytes of data.

64 bytes from 172.25.0.22: icmp_seq=1 ttl=64 time=3.91 ms

64 bytes from 172.25.0.22: icmp_seq=2 ttl=64 time=1.18 ms

^C

--- 172.25.0.22 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 1.185/2.548/3.912/1.364 ms

vrouter-ping: Fabric required. Please use fabric-create/join/show

CLI (network-admin@vNV-UNUM) >
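The vrouter-ping above can only succeed if the two VLAN 3080 vrouter interfaces sit in the same /30, and the same holds for the vNV in-band IP and its VLAN 1 vrouter interface. A minimal sketch of that check, using the Python standard `ipaddress` module, follows. The helper name `is_point_to_point_pair` is hypothetical; the addresses are the ones used in the steps above.

```python
import ipaddress


def is_point_to_point_pair(a, b):
    """True if a and b are two distinct usable hosts in the same subnet."""
    ia = ipaddress.ip_interface(a)
    ib = ipaddress.ip_interface(b)
    usable = set(ia.network.hosts())  # excludes network and broadcast addresses
    return (
        ia.network == ib.network
        and ia.ip != ib.ip
        and ia.ip in usable
        and ib.ip in usable
    )


# vNV vrouter interface and SW3 vrouter interface in VLAN 3080.
vlan3080_ok = is_point_to_point_pair("172.25.0.21/30", "172.25.0.22/30")

# vNV in-band IP and vNV vrouter interface in VLAN 1.
vlan1_ok = is_point_to_point_pair("10.0.0.17/30", "10.0.0.18/30")

print("VLAN 3080 link consistent:", vlan3080_ok)
print("VLAN 1 link consistent:", vlan1_ok)
```

A /30 leaves exactly two usable host addresses, so each link in this scenario has room for only its two endpoints.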

- Create a switch-route on vNV to reach the in-band network of the other switches in the fabric: switch-route-create network 10.0.0.0/24 gateway-ip 10.0.0.18

- Add a vrouter default route on vNV pointing to the SW3 VLAN 3080 vrouter interface: vrouter-static-route-add vrouter-name vNV-UNUM network 0.0.0.0 netmask 0.0.0.0 gateway-ip 172.25.0.22

- Add a vrouter static route on SW3 to reach the vNV in-band network, pointing to the vNV VLAN 3080 vrouter interface: vrouter-static-route-add vrouter-name vrouter-SW3 network 10.0.0.16/30 gateway-ip 172.25.0.21

Note: Configure OSPF on the SW3 vrouter to redistribute static routes.

- At this point, vNV should be able to ping the in-band IPs of the switches in the fabric.

CLI (network-admin@vNV-UNUM) > ping 10.0.0.9

PING 10.0.0.9 (10.0.0.9) 56(84) bytes of data.

64 bytes from 10.0.0.9: icmp_seq=1 ttl=62 time=2.33 ms

64 bytes from 10.0.0.9: icmp_seq=2 ttl=62 time=1.23 ms

^C

--- 10.0.0.9 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 1.237/1.786/2.335/0.549 ms

ping: Fabric required. Please use fabric-create/join/show

CLI (network-admin@vNV-UNUM) >

- vNV should now be able to join the fabric (in this example: test_case).

CLI (network-admin@vNV-UNUM) > fabric-join switch-ip 10.0.0.9

Joined fabric test_case. Restarting nvOS...

Connected to Switch vNV-UNUM; nvOS Identifier:0x4db3f218; Ver: 5.2.0-5020015650

CLI (network-admin@vNV-UNUM) > fabric-node-show format name,fab-name,mgmt-ip,in-band-ip,state,

| name | fab-name | mgmt-ip | in-band-ip | state |
| -------- | --------- | -------------- | ------------ | ------ |
| vNV-UNUM | test_case | 10.13.23.14/23 | 10.0.0.17/30 | online |
| SW3 | test_case | 10.13.22.221/23 | 10.0.0.9/30 | online |
| SW1 | test_case | 10.13.22.220/23 | 10.0.0.1/30 | online |
| SW4 | test_case | 10.13.20.221/23 | 10.0.0.13/30 | online |
| SW2 | test_case | 10.13.20.220/23 | 10.0.0.5/30 | online |
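The static routes in this scenario are what carry traffic between the vNV in-band network and the rest of the fabric. The sketch below models them with a simple longest-prefix-match lookup in Python. It is a simplified illustration, not a description of how Netvisor selects routes: the route tables contain only the connected networks and static routes stated in the steps above, the `lookup` helper is hypothetical, and SW3 is assumed to learn its other fabric routes (for example through OSPF) outside this model.

```python
import ipaddress


def lookup(routes, destination):
    """Return the next hop of the most specific route matching destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(net), hop)
        for net, hop in routes
        if dest in ipaddress.ip_network(net)
    ]
    if not matches:
        return None
    # Longest prefix wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# vNV vrouter: two connected /30 networks plus the default route.
vnv_routes = [
    ("10.0.0.16/30", "connected (VLAN 1)"),
    ("172.25.0.20/30", "connected (VLAN 3080)"),
    ("0.0.0.0/0", "172.25.0.22"),
]

# SW3 vrouter: only the routes named in this scenario.
sw3_routes = [
    ("172.25.0.20/30", "connected (VLAN 3080)"),
    ("10.0.0.16/30", "172.25.0.21"),
]

# vNV reaching the SW3 in-band IP goes out through the default route.
print(lookup(vnv_routes, "10.0.0.9"))

# SW3 reaching the vNV in-band IP uses the static route toward vNV.
print(lookup(sw3_routes, "10.0.0.17"))
```

The two lookups show each side handing traffic to the other's VLAN 3080 vrouter interface, which is the return path the static route on SW3 exists to provide.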

Switch Configuration Tweaks to Connect to UNUM

- Disable web admin service:

admin-service-modify if mgmt no-web

admin-service-modify if data no-web

- Set the cos3 rate limit to 500:

Redirect analytics traffic to an unused CoS queue (cos3 in this example) and set the rate limit to prevent it from overrunning the CPU: port-cos-rate-setting-modify port control-port cos3-rate 500

- Enable analytics, if disabled, and redirect it to the CoS queue:

connection-stats-settings-modify enable

debug-nvOS set-level flow

vflow-system-modify name System-S flow-class class3

vflow-system-modify name System-F flow-class class3

vflow-system-modify name System-R flow-class class3

debug-nvOS unset-level flow

- Repeat the above steps on all switches in the fabric.

- Enable web admin service on vNV: admin-service-modify if mgmt web

- Disable analytics on vNV: connection-stats-settings-modify disable

At this point, vNV is ready to act as a seed switch for UNUM to collect analytics.
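Because the same commands are repeated on every fabric switch while vNV receives a different pair, it can help to lay the plan out per switch before applying it. The following Python sketch only assembles and prints the command strings given in this section; it does not connect to any switch. The `build_plan` helper is a hypothetical name, the switch names are those of this example, and the sketch assumes that vNV receives only its two vNV-specific commands.

```python
# Commands applied to every physical switch in the fabric (from this section).
FABRIC_SWITCH_COMMANDS = [
    "admin-service-modify if mgmt no-web",
    "admin-service-modify if data no-web",
    "port-cos-rate-setting-modify port control-port cos3-rate 500",
    "connection-stats-settings-modify enable",
    "debug-nvOS set-level flow",
    "vflow-system-modify name System-S flow-class class3",
    "vflow-system-modify name System-F flow-class class3",
    "vflow-system-modify name System-R flow-class class3",
    "debug-nvOS unset-level flow",
]

# Commands applied to vNV only (assumption: vNV gets just these two).
VNV_COMMANDS = [
    "admin-service-modify if mgmt web",
    "connection-stats-settings-modify disable",
]


def build_plan(fabric_switches, vnv_name):
    """Map each switch name to the ordered list of commands to run on it."""
    plan = {name: list(FABRIC_SWITCH_COMMANDS) for name in fabric_switches}
    plan[vnv_name] = list(VNV_COMMANDS)
    return plan


plan = build_plan(["SW1", "SW2", "SW3", "SW4"], "vNV-UNUM")
for switch, commands in plan.items():
    print(f"# {switch}")
    for command in commands:
        print(command)
```

Keeping the order of the list matters here, since the debug level is set before the vflow changes and unset afterwards.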