Configuring Kubernetes Visibility through vPort Table and Connection Table

The Container Network Interface (CNI) is a proposed standard for configuring network interfaces for Linux application containers. A CNI plugin is responsible for inserting a network interface into the container network namespace. Kubernetes uses CNI as the interface between network providers and Kubernetes Pod networking. CNI plugins can implement their network using either an encapsulated (overlay) network model, such as VXLAN, or an unencapsulated (underlay) network model, such as BGP. Each plugin can also operate in different modes to implement either network type. For more information on Kubernetes networking and CNI plugins, see the Kubernetes cluster networking documentation.

Netvisor ONE version 7.0.0 uses information from the Kubernetes client to detect the CNI configuration of the Kubernetes cluster, and uses that configuration to build the vPort table and the connection table for Pod-to-Pod traffic analytics. This feature currently supports only Flannel and Calico as CNI plugins. For more information on vPorts, see Understanding Fabric Status Updates, vPorts and Keepalives.

Use the k8s-connection-modify command to configure vPort table creation and overlay VXLAN analytics.

To enable vPort table creation for an existing Kubernetes connection, use the command:

CLI (network-admin@switch) > k8s-connection-modify cluster-name k8s01 create-vport

To enable overlay VXLAN analytics, issue the command:

CLI (network-admin@switch) > k8s-connection-modify cluster-name k8s01 overlay-vxlan-analytics

Note:

- When you enable overlay-vxlan-analytics, you may need to reboot all the switches in the fabric or restart Netvisor ONE on several nodes in the fabric.

- You cannot enable overlay-vxlan-analytics on more than one Kubernetes connection in a fabric.

- When you enable overlay-vxlan-analytics, Netvisor ONE internally enables inflight-vxlan-analytics. For more information on inflight-vxlan-analytics, see Configuring vFlows with User Defined Fields (UDFs).

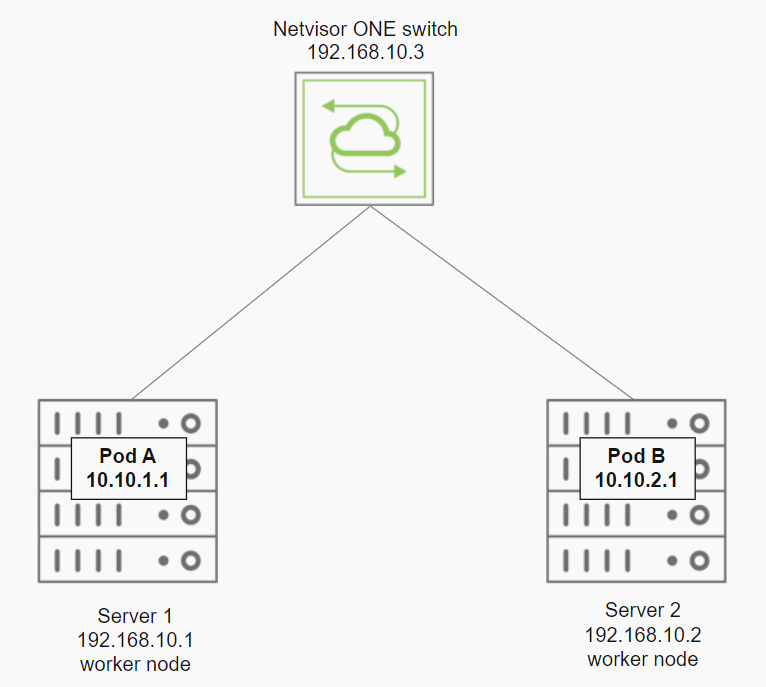

As an example, consider a Kubernetes environment illustrated in the figure below having Flannel as the CNI plugin and host-gw as the backend. The worker nodes server1 and server 2 host Pod A and Pod B respectively.

Figure 14-1: Pod to Pod Kubernetes Traffic Analytics

Configure the connection to the Kubernetes API server and enable vPort table creation by using the commands:

CLI (network-admin@switch) > k8s-connection-create cluster-name pluribus kube-config /root/.kube/config enable

CLI (network-admin@switch) > k8s-connection-modify cluster-name pluribus create-vport

The CNI information can be displayed by using the command:

CLI (network-admin@switch) > k8s-cni-show

| k8s-cni-show | Display CNI information. |
|---|---|
| cluster-name cluster-name-string | The name of the Kubernetes cluster. |
| start-time date/time: yyyy-mm-ddTHH:mm:ss | The start time of statistics collection. |
| end-time date/time: yyyy-mm-ddTHH:mm:ss | The end time of statistics collection. |
| duration duration: #d#h#m#s | The duration of statistics collection. |
| interval duration: #d#h#m#s | The interval between statistics collections. |
| since-start | Statistics collected since the start time. |
| older-than duration: #d#h#m#s | Display statistics older than the specified duration. |
| within-last duration: #d#h#m#s | Display statistics within the last specified duration. |
| cni-plugin cni-plugin-string | The CNI plugin name. |
| backend backend-string | The backend used by the CNI plugin. |
| udp-port udp-port-number | The VXLAN destination UDP port. |
| vxlan 0..16777215 | The VXLAN ID for the vFlow. |
| network-type network-type-string | The network type implemented by the CNI plugin. |
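The duration, interval, older-than, and within-last parameters take values in the #d#h#m#s form, and start-time and end-time take yyyy-mm-ddTHH:mm:ss. If you generate these queries from a script, a minimal Python sketch such as the following can build the strings. The helper names are hypothetical, and always emitting all four units (for example, 0d1h30m0s) is an assumption based on the format shown in the table; verify the accepted forms on your switch.

```python
from datetime import datetime, timedelta


def format_duration(delta: timedelta) -> str:
    """Render a non-negative timedelta in the #d#h#m#s form.

    Assumption: all four units are always emitted; fractional seconds are dropped."""
    total = int(delta.total_seconds())
    if total < 0:
        raise ValueError("duration must be non-negative")
    days, rem = divmod(total, 86400)
    hours, rem = divmod(rem, 3600)
    minutes, seconds = divmod(rem, 60)
    return f"{days}d{hours}h{minutes}m{seconds}s"


def format_timestamp(ts: datetime) -> str:
    """Render a datetime in the yyyy-mm-ddTHH:mm:ss form used by start-time/end-time."""
    return ts.strftime("%Y-%m-%dT%H:%M:%S")


def k8s_cni_show_command(cluster: str, within_last: timedelta) -> str:
    # Hypothetical helper: builds the CLI text only; it does not contact the switch.
    return f"k8s-cni-show cluster-name {cluster} within-last {format_duration(within_last)}"


print(format_duration(timedelta(hours=1, minutes=30)))   # 0d1h30m0s
print(format_timestamp(datetime(2023, 5, 1, 9, 5, 0)))   # 2023-05-01T09:05:00
print(k8s_cni_show_command("pluribus", timedelta(days=2)))
```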

```
CLI (network-admin@switch) > k8s-cni-show

cluster-name cni-plugin backend network-type
------------ ---------- ------- ------------
pluribus     flannel    host-gw underlay
```

For the two Pods running on different worker nodes, the vport-show output is:

```
CLI (network-admin@switch) > vport-show svc-name pluribus

owner  mac               vlan ip        svc-name hostname entity power   portgroup       status
------ ----------------- ---- --------- -------- -------- ------ ------- --------------- ------------
switch 88:88:88:bf:5d:be 4090 10.10.2.1 pluribus server2  pod-A  Running app:hello-world host,pod,k8s
switch 88:88:88:bf:2e:ac 4090 10.10.1.1 pluribus server1  pod-B  Running app:hello-world host,pod,k8s
```

The vPort table is created statically and does not depend on the traffic that goes through the switch. Therefore, the mac and vlan fields are populated with dummy values. The portgroup field displays the Pod label, and the svc-name field displays the cluster name. The hostname field shows the node on which the Pod is running, the entity field shows the Pod name, and the power field shows the state of the Pod container.
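To make the field mapping concrete, the following Python sketch parses vport-show output like the sample above and renames each column to its Kubernetes meaning. It is an illustration, not a Pluribus tool: the PodVport and parse_vport_show names are hypothetical, and the parser assumes whitespace-separated output with no spaces inside any field, as in the sample.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PodVport:
    """One vport-show row, with columns renamed to their Kubernetes meaning."""
    cluster: str  # svc-name: the Kubernetes cluster name
    node: str     # hostname: the node on which the Pod runs
    pod: str      # entity: the Pod name
    state: str    # power: the state of the Pod container
    label: str    # portgroup: the Pod label
    ip: str       # ip: the Pod IP address (mac and vlan are dummy values, so skipped)


def parse_vport_show(output: str) -> List[PodVport]:
    """Parse whitespace-separated vport-show output (header, dashed separator, rows).

    Assumption: no field contains spaces, as in the sample output."""
    lines = [ln for ln in output.splitlines() if ln.strip()]
    header = lines[0].split()
    pods = []
    for line in lines[1:]:
        if set(line.replace(" ", "")) == {"-"}:
            continue  # skip the dashed separator line
        row = dict(zip(header, line.split()))
        pods.append(PodVport(
            cluster=row["svc-name"], node=row["hostname"], pod=row["entity"],
            state=row["power"], label=row["portgroup"], ip=row["ip"],
        ))
    return pods


SAMPLE = """\
owner  mac               vlan ip        svc-name hostname entity power   portgroup       status
------ ----------------- ---- --------- -------- -------- ------ ------- --------------- ------------
switch 88:88:88:bf:5d:be 4090 10.10.2.1 pluribus server2  pod-A  Running app:hello-world host,pod,k8s
switch 88:88:88:bf:2e:ac 4090 10.10.1.1 pluribus server1  pod-B  Running app:hello-world host,pod,k8s
"""

for p in parse_vport_show(SAMPLE):
    print(f"{p.pod} runs on {p.node} ({p.ip}), state {p.state}, label {p.label}")
```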

Note: The vPort table is created locally on the switch where the Kubernetes connection is configured and is not shared with other nodes in the fabric.

The connection-show command displays the connection information:

```
CLI (network-admin@switch) > connection-show

switch vlan src-ip    dst-ip    dst-port cur-state latency age
------ ---- --------- --------- -------- --------- ------- ---
switch 1    10.10.1.1 10.10.2.1 8080     syn-ack   4.01s   9s
```
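To read the connection table in Pod terms, you can join each connection-show row with the Pod IP addresses reported by vport-show. The Python sketch below does this for the sample output. The POD_BY_IP table is copied by hand from the vport-show sample, the helper names are hypothetical, and the parser assumes that latency is always printed in seconds with an "s" suffix, as in the sample row.

```python
from typing import Dict, NamedTuple, Tuple

# Pod IP -> (Pod name, node), copied by hand from the sample vport-show output.
POD_BY_IP: Dict[str, Tuple[str, str]] = {
    "10.10.2.1": ("pod-A", "server2"),
    "10.10.1.1": ("pod-B", "server1"),
}


class Connection(NamedTuple):
    src_ip: str
    dst_ip: str
    dst_port: int
    state: str
    latency_s: float


def parse_connection_row(line: str) -> Connection:
    """Parse one data row: switch vlan src-ip dst-ip dst-port cur-state latency age."""
    _switch, _vlan, src, dst, port, state, latency, _age = line.split()
    # Assumption: latency is printed in seconds with an "s" suffix (e.g. "4.01s").
    return Connection(src, dst, int(port), state, float(latency.rstrip("s")))


def describe(conn: Connection) -> str:
    """Replace IP addresses with Pod@node names where the vPort data knows them."""
    src_pod, src_node = POD_BY_IP.get(conn.src_ip, (conn.src_ip, "?"))
    dst_pod, dst_node = POD_BY_IP.get(conn.dst_ip, (conn.dst_ip, "?"))
    return (f"{src_pod}@{src_node} -> {dst_pod}@{dst_node}:{conn.dst_port} "
            f"[{conn.state}, {conn.latency_s:.2f}s]")


conn = parse_connection_row("switch 1    10.10.1.1 10.10.2.1 8080     syn-ack   4.01s   9s")
print(describe(conn))  # pod-B@server1 -> pod-A@server2:8080 [syn-ack, 4.01s]
```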

In a Kubernetes environment with Flannel as the CNI plugin and VXLAN as the backend, inter-Pod traffic between nodes is VXLAN-encapsulated. In that case, you can enable overlay-vxlan-analytics to view the connection statistics for inter-Pod traffic.
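Whether overlay-vxlan-analytics is relevant depends on how the CNI backend carries inter-Pod traffic. The following Python sketch encodes that decision for a few plugin and backend combinations. Only the flannel/host-gw entry (underlay) comes from the k8s-cni-show sample; the other entries, including the backend strings themselves, are assumptions about how these backends encapsulate traffic and may not match what Netvisor ONE reports.

```python
from typing import Dict, Tuple

# Illustrative (plugin, backend) -> network type mapping.
# flannel/host-gw -> underlay comes from the k8s-cni-show sample; the other
# entries and backend names are assumptions, not Netvisor ONE detection logic.
NETWORK_TYPE: Dict[Tuple[str, str], str] = {
    ("flannel", "host-gw"): "underlay",
    ("flannel", "vxlan"): "overlay",
    ("calico", "bgp"): "underlay",
    ("calico", "ipip"): "overlay",   # encapsulated, but IP-in-IP rather than VXLAN
    ("calico", "vxlan"): "overlay",
}


def overlay_vxlan_analytics_useful(plugin: str, backend: str) -> bool:
    """True when inter-Pod traffic is VXLAN-encapsulated, so Pod-to-Pod flows
    are only visible inside the tunnel."""
    key = (plugin.lower(), backend.lower())
    if key not in NETWORK_TYPE:
        raise ValueError(f"unsupported CNI combination: {plugin}/{backend}")
    return NETWORK_TYPE[key] == "overlay" and key[1] == "vxlan"


print(overlay_vxlan_analytics_useful("flannel", "host-gw"))  # False
print(overlay_vxlan_analytics_useful("flannel", "vxlan"))    # True
```

The explicit vxlan check matters for entries such as Calico IP-in-IP, which is an overlay but does not use VXLAN encapsulation.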

Guidelines and Limitations

- Netvisor ONE supports only Flannel and Calico as CNI plugins for Kubernetes visibility through vPort and connection tables.

- Overlay VXLAN analytics with a non-default VNI may not work with the Calico CNI plugin.

- For the connection table to be populated, you must enable connection statistics on the switch:

CLI (network-admin@switch) > connection-stats-settings-modify enable

- If you change the CNI configuration, you must reboot the Kubernetes nodes and restart the Kubernetes connection.

- The k8s-connection-create and k8s-connection-modify commands have fabric scope. You can configure up to four Kubernetes connections in a fabric.
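Two fabric-wide limits apply to Kubernetes connections: at most four connections per fabric, and overlay-vxlan-analytics enabled on at most one of them. The following Python sketch checks a planned configuration against those limits before you apply it. The K8sConnection class and check_fabric_plan function are hypothetical; the sketch does not contact the switch and checks only the documented limits.

```python
from dataclasses import dataclass
from typing import List

MAX_K8S_CONNECTIONS = 4  # per fabric, from the guideline above


@dataclass
class K8sConnection:
    # Hypothetical model of a planned k8s-connection-create/modify configuration.
    cluster_name: str
    create_vport: bool = False
    overlay_vxlan_analytics: bool = False


def check_fabric_plan(conns: List[K8sConnection]) -> List[str]:
    """Return violations of the documented fabric-wide limits (empty list if none)."""
    problems = []
    if len(conns) > MAX_K8S_CONNECTIONS:
        problems.append(
            f"{len(conns)} connections configured; at most {MAX_K8S_CONNECTIONS} allowed")
    overlay = [c.cluster_name for c in conns if c.overlay_vxlan_analytics]
    if len(overlay) > 1:
        problems.append(
            "overlay-vxlan-analytics enabled on more than one connection: " + ", ".join(overlay))
    return problems


plan = [
    K8sConnection("k8s01", create_vport=True, overlay_vxlan_analytics=True),
    K8sConnection("pluribus", create_vport=True, overlay_vxlan_analytics=True),
]
print(check_fabric_plan(plan))
```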