Understanding Quality of Service (QoS)

In computer networking, the term Quality of Service (QoS) refers to the use of techniques and technologies to ensure that different applications and/or devices can benefit from appropriate network guarantees to operate optimally, even in the presence of constrained bandwidth and network congestion.

QoS technologies can be used to optimize network performance, prevent service disruption, and provide protection to devices in the network. For example, they can be used to ensure that the traffic of mission-critical applications is prioritized and not disrupted during times of high network congestion.

QoS enables organizations to optimize their overall network utilization by applying different prioritization levels to the traffic of different applications, that is, to different traffic classes.

On the other hand, when QoS is not enabled, a network delivers traffic on a best-effort basis: all traffic is forwarded with the same priority and therefore has an equal chance of being delivered or of being dropped in case of congestion.

When QoS is enabled, traffic can be classified based on a number of criteria (such as certain packet fields or granular hardware-based policies). Once service classes are associated with the packets, the packets can be prioritized according to policies, implemented by the network administrator, that take into account the requirements of each traffic class. Some applications, for example, may be sensitive to latency, while others may tolerate packet drops poorly. The network administrator can therefore apply different prioritization policies to different types of traffic to optimize each application’s or protocol’s behavior. For instance, certain protocols may be very sensitive to the effects of congestion, so the network administrator may elect to use QoS techniques for congestion avoidance.
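The difference between best-effort delivery and class-based prioritization can be illustrated with a minimal Python sketch. It assumes three hypothetical traffic classes (voice, video, and best effort) and a strict-priority policy, and it treats the packets as already waiting at a congested port; it is not a model of any particular switch implementation.

```python
from collections import deque

# Hypothetical traffic classes for illustration: a lower number means a higher priority.
VOICE, VIDEO, BEST_EFFORT = 0, 1, 2


def fifo_order(packets):
    """Best effort: one shared queue, so packets leave strictly in arrival order."""
    queue = deque(packets)
    return [queue.popleft() for _ in range(len(queue))]


def strict_priority_order(packets, num_classes=3):
    """Place each packet in the queue of its traffic class, then always
    transmit from the highest-priority queue that is not empty."""
    queues = [deque() for _ in range(num_classes)]
    for packet in packets:
        _name, traffic_class = packet
        queues[traffic_class].append(packet)
    sent = []
    while any(queues):
        for queue in queues:  # scan from highest to lowest priority
            if queue:
                sent.append(queue.popleft())
                break
    return sent


# Packets already waiting at a congested port: (name, traffic class).
arrivals = [
    ("bulk-1", BEST_EFFORT),
    ("voice-1", VOICE),
    ("bulk-2", BEST_EFFORT),
    ("video-1", VIDEO),
    ("voice-2", VOICE),
]

print([name for name, _ in fifo_order(arrivals)])
print([name for name, _ in strict_priority_order(arrivals)])
```

With the sample packets, the FIFO order is unchanged, whereas strict priority transmits both voice packets first, then the video packet, then the bulk packets. Strict priority can starve lower classes under sustained load, which is why schedulers often combine it with weighted round robin or similar disciplines for the remaining classes.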

In a nutshell, implementing QoS in a network improves the predictability of network performance (in terms of throughput and latency) and optimizes bandwidth utilization.

In general, the broad term ‘QoS’ refers to a set of techniques and mechanisms that include:

- Port buffering: consists of techniques for allocating temporary storage to packets during network congestion (i.e., when packets cannot be forwarded immediately) to avoid drops at network choke points.

- Port queuing: refers to segregating traffic into different transmission queues based on one or more classification criteria.

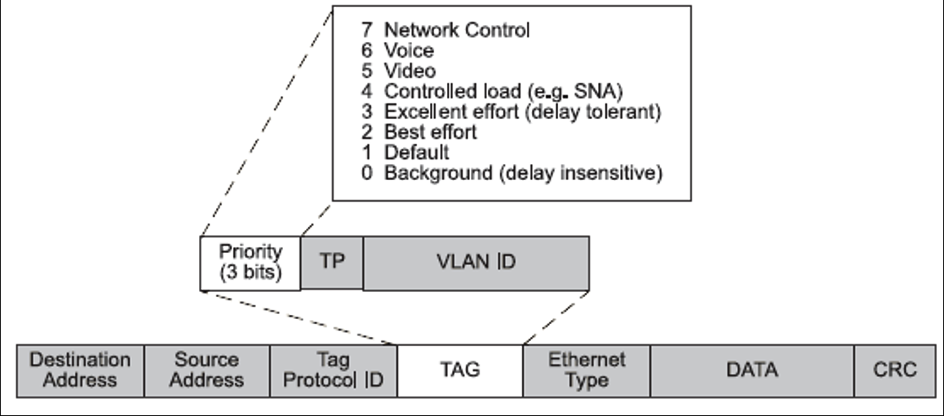

- Traffic classification and marking: is the process of selecting traffic and associating it with a class value. Packets can carry a class or marking directly in their headers: 802.1Q-tagged packets carry an 802.1p Class of Service (CoS) priority field (see figure below), while IP packet headers include a differentiated services code point (DSCP) field. Traffic classification can be derived directly from the packet fields or, when those fields are missing or cannot be trusted, the traffic is (re)marked by the hardware based on some preconfigured policies. A per-port ingress trust attribute determines which classification behavior to apply to incoming packets.

- Traffic scheduling: packet buffers can be divided into multiple queues to which packets get scheduled based on their classification (for example, based on their CoS value).

- Congestion avoidance: consists of various techniques that aim to prevent or minimize downstream traffic congestion.

- Traffic policing (or rate limiting): is a technique to limit the speed of a flow by dropping the packets that exceed a configured threshold (in addition, re-marking can also be used to conditionally drop traffic in case of downstream congestion).

- Traffic shaping: is a technique to limit the speed of a flow by queuing up the packets that exceed a configured threshold. This technology is more drop-averse and hence TCP friendly (compared to policing) but, when queuing up traffic, the latency of the buffered packets may be significantly increased (which is not always desirable).

Figure 13-1: IEEE 802.1Q Header with Class of Service/Priority Field
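The contrast between policing and shaping can be sketched with a token bucket, a common (though not the only) way to express a rate threshold. In this minimal Python example the rate (bytes per second), burst size (bucket depth in bytes), and packet trace are hypothetical toy values; the shaper ignores serialization delay and assumes no packet exceeds the burst size.

```python
def police(packets, rate, burst):
    """Token-bucket policer: a packet that finds too few tokens is dropped.

    packets: (arrival_time_s, size_bytes) tuples sorted by arrival time.
    rate: token refill rate in bytes/s; burst: bucket depth in bytes.
    Returns one boolean per packet (True = forwarded, False = dropped).
    """
    tokens, last = float(burst), 0.0  # the bucket starts full
    verdicts = []
    for arrival, size in packets:
        # Refill for the time elapsed since the previous packet, capped at the burst size.
        tokens = min(burst, tokens + rate * (arrival - last))
        last = arrival
        if tokens >= size:
            tokens -= size
            verdicts.append(True)
        else:
            verdicts.append(False)  # exceeds the configured rate: drop
    return verdicts


def shape(packets, rate, burst):
    """Token-bucket shaper: a packet that finds too few tokens waits in a FIFO
    buffer until enough tokens accumulate. Returns departure times in seconds.
    Assumes every packet is at most `burst` bytes and ignores serialization delay.
    """
    tokens, last = float(burst), 0.0  # `last` = previous departure time (0.0 initially)
    departures = []
    for arrival, size in packets:
        # A packet cannot leave before it arrives or before the packet ahead of it.
        start = max(arrival, last)
        tokens = min(burst, tokens + rate * (start - last))
        wait = max(0.0, (size - tokens) / rate)  # time needed to earn the missing tokens
        depart = start + wait
        tokens = tokens + rate * wait - size
        last = depart
        departures.append(depart)
    return departures


# Hypothetical trace: three 1000-byte packets at t = 0 s and one at t = 2 s.
trace = [(0.0, 1000), (0.0, 1000), (0.0, 1000), (2.0, 1000)]
print(police(trace, rate=1000, burst=1500))
print(shape(trace, rate=1000, burst=1500))
```

With a 1000 byte/s rate and a 1500-byte burst, the policer forwards the first and last packets and drops the two in between, while the shaper forwards all four but delays them to 0, 0.5, 1.5, and 2.5 s. This is the drop-versus-latency trade-off described in the list above.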

QoS can logically be applied at the ingress or at the egress of a switch. The switch forwarding engine can also apply hardware policies to the traffic, for example to re-mark or police it.

Layer 2 QoS revolves around 802.3 and 802.1Q technologies such as 802.1p class of service (CoS) and packet flow control. Because it operates at Layer 2, it can be applied to both IP and non-IP traffic.
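As a sketch of where the 802.1p CoS value lives in the 802.1Q tag (see Figure 13-1), the following Python function extracts the 3-bit priority code point (PCP), the drop eligible indicator (DEI), and the 12-bit VLAN ID from the tag control information. It assumes a raw frame that starts at the destination MAC address and carries a single tag with TPID 0x8100; stacked tags and other TPIDs are not handled, and the function name is illustrative only.

```python
import struct
from typing import Optional

TPID_8021Q = 0x8100  # Tag Protocol Identifier for a single 802.1Q tag


def parse_dot1q(frame: bytes) -> Optional[tuple[int, int, int]]:
    """Return (pcp, dei, vid) for an 802.1Q-tagged Ethernet frame, or None if untagged."""
    # Destination MAC (6) + source MAC (6) + tag (4) + inner EtherType (2).
    if len(frame) < 18:
        return None
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return None
    pcp = tci >> 13          # 3-bit priority code point: the 802.1p CoS value
    dei = (tci >> 12) & 0x1  # 1-bit drop eligible indicator
    vid = tci & 0x0FFF       # 12-bit VLAN identifier
    return pcp, dei, vid


# Hypothetical frames: zeroed MAC addresses, then either a tag or a plain EtherType.
tagged = bytes(12) + struct.pack("!HHH", TPID_8021Q, (5 << 13) | 100, 0x0800)
untagged = bytes(12) + struct.pack("!H", 0x0800) + bytes(4)

print(parse_dot1q(tagged))
print(parse_dot1q(untagged))
```

The tagged sample frame yields PCP 5, DEI 0, and VLAN 100, while the untagged frame returns `None`; a switch that does not trust or cannot find this field would fall back to a configured default class instead.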

Layer 3 QoS applies to IP traffic and can therefore leverage the differentiated services code point (DSCP) field (defined in RFC 2474), which in IPv4 replaced the outdated Type of Service (ToS) field (defined in RFC 791).
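DSCP occupies the upper six bits of the former IPv4 ToS byte (the IPv6 Traffic Class byte has the same layout), and the lower two bits carry Explicit Congestion Notification (ECN, RFC 3168). A minimal Python sketch of extracting the DSCP value from a raw IPv4 header follows; the function names are illustrative only.

```python
def split_tos_byte(tos: int) -> tuple[int, int]:
    """Split an IPv4 ToS (or IPv6 Traffic Class) byte into (dscp, ecn)."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("expected a single byte value")
    dscp = tos >> 2   # upper six bits: DSCP (RFC 2474)
    ecn = tos & 0x3   # lower two bits: ECN (RFC 3168)
    return dscp, ecn


def dscp_from_ipv4_header(header: bytes) -> int:
    """Return the DSCP value carried in a raw IPv4 header."""
    # The minimum IPv4 header is 20 bytes; the version is the top nibble of byte 0.
    if len(header) < 20 or header[0] >> 4 != 4:
        raise ValueError("not an IPv4 header")
    dscp, _ecn = split_tos_byte(header[1])  # byte 1 is the former ToS field
    return dscp


# Hypothetical header: version 4, IHL 5, ToS 0xB8, remaining fields zeroed.
header = bytes([0x45, 0xB8]) + bytes(18)
print(split_tos_byte(0xB8))
print(dscp_from_ipv4_header(header))
```

For example, a ToS byte of 0xB8 yields DSCP 46, the Expedited Forwarding (EF) code point commonly used for voice traffic, with both ECN bits set to 0.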

QoS can be applied to data plane traffic as well as to control plane traffic. In the former case, it is used to optimize traffic flow, resource allocation, and application behavior. It can also be used to protect certain classes of traffic, devices, or users.

When the device to be protected (for example, from denial-of-service attacks or network malfunctions) is the control plane CPU of a switch, QoS can be very useful to ensure network stability and security, because QoS-based optimizations can conserve the CPU’s computing and memory resources and save CPU cycles.

Regarding QoS applied to control plane protection, Arista Networks has implemented the Control Plane Traffic Protection (CPTP) feature. For more information, refer to the Configuring Control Plane Traffic Protection (CPTP) section of the Configuring Network Security chapter.