Understanding Virtual Networks (vNETs)

Virtual Networks Overview

Arista Networks supports various network virtualization capabilities as part of a highly flexible approach to software-defined networking (SDN), called Unified Cloud Fabric (previously known as Adaptive Cloud Fabric).

Arista’s fabric addresses the most common network design requirements, including scalability, redundancy, predictable growth, and fast convergence after a failure. These requirements also include multi-tenancy support.

Therefore, in the data center, by leveraging NetVisor OS’s virtualization features, network designers can implement a variety of multi-tenancy models such as Infrastructure as a Service (IaaS) or Network as a Service (NaaS).

To support multiple tenants, the fabric’s data plane segmentation technologies include standard features (such as VLANs) as well as advanced virtualization features (such as VXLAN and distributed VRFs), which make it possible to deploy an open, interoperable, high-capacity, and high-scale multi-tenant network. (Refer to the Configuring VXLAN chapter for more details on VXLAN and distributed VRFs.)

In addition to data plane segmentation, fabric virtualization also comprises the capability of separating tenants into isolated management domains. In general network parlance, this capability is handled by a virtual POD (or vPOD), which is a logical construct in a shared infrastructure where a tenant can only see and use resources that are allocated to that particular tenant. Arista calls this capability Virtual Networks (vNETs).

Low-end platforms with limited memory cannot host multiple vNETs. In such cases, you can host the vNETs on a separate server (such as an ESXi server) to provide multi-tenancy and vNET-based management by using Virtual NetVisor.

Virtual NetVisor (vNV) is a virtual machine running NetVisor OS that provides vNET capabilities to a Unified Cloud Fabric, even when the fabric consists of low-end platforms that cannot run multiple vNET Managers. For details, see the Configuring vCenter Features chapter of this guide or the Virtual NetVisor Deployment Guide.

By joining the Unified Cloud Fabric that is composed of ONVL switches, vNV provides more compute resources to run vNET Manager containers, in standalone mode or in an HA pair, and offers multi-tenancy capability in an existing fabric.

Two vNV instances can share the same vNET Manager interface to provide a fully redundant management plane for each tenant created. A pair of vNV instances can support up to 32 vNETs in HA mode and 64 vNETs in standalone mode.
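The limits quoted above can be turned into a quick planning check. The following is a minimal Python sketch, not part of NetVisor OS: the names `VNV_PAIR_VNET_LIMITS` and `fits_on_vnv_pair` are hypothetical, and the only values taken from this guide are the two limits (32 vNETs in HA mode, 64 in standalone mode).

```python
# Limits quoted in this guide for a pair of vNV instances.
VNV_PAIR_VNET_LIMITS = {"ha": 32, "standalone": 64}


def fits_on_vnv_pair(planned_vnets: int, mode: str) -> bool:
    """Return True if the planned number of vNETs is within the quoted limit."""
    if mode not in VNV_PAIR_VNET_LIMITS:
        raise ValueError(f"unknown mode: {mode!r}")
    if planned_vnets < 0:
        raise ValueError("planned_vnets must be non-negative")
    return planned_vnets <= VNV_PAIR_VNET_LIMITS[mode]
```

For example, `fits_on_vnv_pair(40, "ha")` is false, while the same 40 vNETs fit in standalone mode at the cost of management-plane redundancy.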

The vNV workflow includes creating a virtual machine (VM) from a template on an ESXi host, provisioning network adaptors (vNICs) and port-groups on the vSwitches, and provisioning fabric and data network on NetVisor OS. Each tenant can view and manage its network slice by logging into the vNET manager zone (described in later sections).

About vNETs

A vNET is an abstract control plane resource that is implemented globally across the fabric to identify a tenant’s domain. By using vNETs, you can segment a physical fabric into many logical domains, each with separate resources, network services, and Quality of Service (QoS) guarantees. vNETs therefore allow the network administrator to completely separate and manage the provisioning of multiple tenants within the network.

In simple terms, vNETs are separate resource management spaces that can operate in the data plane as well as in the control plane. That is, a physical network can be divided into multiple virtual networks to manage the resources of multiple customers or organizations (multi-tenancy).

Each vNET has a single point of management. The fabric/network administrator can create vNETs and assign ownership of each vNET to the vNET administrators with responsibility for managing those resources (for example, manage the VLANs, vLEs, vPGs, vFlows associated with that vNET). For details about these resources, refer to the respective sections in the Configuration Guide (see Related Documentation below).

About vNET Architecture

By creating a vNET in the Unified Cloud Fabric, the network resources can be partitioned and dedicated to a single tenant with fully delegated management capabilities. This partition of the fabric can be configured either on one switch, a cluster of two switches, or the entire fabric by leveraging the “scope” parameter in the CLI command, depending on where you want the vNET to deliver a reserved service to a tenant.

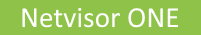

Figure 18-1 shows how a multi-tenant leaf-spine design can be achieved with Arista’s Unified Cloud Fabric. The components are explained in detail below.

Fig 18-1: Multi-tenant Fabric Design Components

Further, vNETs are very flexible and can be used to create complex network architectures. In another example, an Arista Networks switch, or a fabric of switches, is used to create multiple tenant environments (Fig 18-2). In this diagram, there are three vNETs (yellow, blue, and red), each one with a management interface and a data interface. Each vNET is assigned an IP address pool used for DHCP assignment of IP addresses to each node, server, or OS component.

With vNETs, you can have segmentation (logical network slices) of a physical infrastructure at data plane, control plane, and management plane with a dedicated management interface (see Fig 18-2).

Fig 18-2: A vNET Infrastructure with Multi-site Fabrics

Underlying each vNET is the vNET manager (see below for the description of a vNET Manager). Each vNET manager runs in a zone. When services are created for a vNET, they occupy the same zone on the switch. This is called a shared service and it is the default when creating services. However, each zone can only support a single instance of a service. If a second service instance is needed for a vNET, then it needs to occupy a separate zone. This is called a dedicated service. In most cases, you can create services as shared resources unless you specifically want to create a dedicated service.
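The shared versus dedicated placement rule can be sketched in a few lines of Python. This is an illustrative model only, not NetVisor OS code: the `Zone` and `VnetZones` classes, the zone names, and the service label `"dhcp"` are all hypothetical, and the sketch assumes that "a single instance of a service" means one instance per service type in each zone.

```python
from dataclasses import dataclass, field


@dataclass
class Zone:
    name: str                      # hypothetical zone name, for illustration only
    dedicated: bool = False
    services: set = field(default_factory=set)


class VnetZones:
    """Tracks which zone hosts each service instance of one vNET."""

    def __init__(self, vnet_name: str):
        self.vnet_name = vnet_name
        # The vNET manager zone hosts shared services by default.
        self.manager_zone = Zone(name=f"{vnet_name}-mgr")
        self.dedicated_zones = []

    def add_service(self, service_type: str) -> Zone:
        # Default: place the service in the vNET manager zone (shared service).
        if service_type not in self.manager_zone.services:
            self.manager_zone.services.add(service_type)
            return self.manager_zone
        # The zone already holds an instance of this service, so the second
        # instance must live in its own zone (dedicated service).
        zone = Zone(
            name=f"{self.vnet_name}-{service_type}-{len(self.dedicated_zones) + 1}",
            dedicated=True,
            services={service_type},
        )
        self.dedicated_zones.append(zone)
        return zone
```

Calling `add_service("dhcp")` twice on the same `VnetZones` object returns the manager zone the first time and a new dedicated zone the second time, which mirrors the rule described above.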

For details of each component, see the sections below.

Default vNET (Global vNET)

The Default or Global vNET is the default partition on a switch that is used when the switch has not been configured with additional vNETs. That is, when a fabric is created, a vNET is also automatically created and is named fabric-name-global. This default partition owns all the resources on a NetVisor switch such as:

- Physical ports

- Default VLAN space

- Default Routing table/domain (if enabled on the switch)

- vRouter functions

- VTEP function

- VLE function

- VPG function

- vFlows

- Any L2/L3 entries learned through static and dynamic protocols

- Any additional containers created

Later, when the user-specific tenant vNETs are created, the network administrator can allocate resources from the default vNET to those tenant vNETs.

The default vNET is not configured by the fabric/network administrator. It is a partition created when the switch becomes part of a fabric. Also, there is no vNET Manager container associated with the default vNET (see below for the description of a vNET Manager).

Note: The default vNET uses the naming convention: <fabric-name>-global.

Tenant vNET (referred to as just vNET)

A Tenant vNET is a partition that carves out the following software and hardware resources from the switch. This ensures that the tenant can use and manage its reserved resources.

- VLAN IDs

- MAC addresses

- vRouters or VRFs

- Remote VTEPs (Virtual Tunnel Endpoints)

- Physical ports

You can create a vNET with different scopes such as:

- Scope local: The vNET is locally created on the switch and cannot be used by any other switch in the same fabric.

- Scope cluster: The vNET is created on a cluster pair that will share the same Layer 2 domain.

- Scope fabric: The vNET partition is created on all switches in the same fabric.
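The effect of the three scopes can be illustrated with a small Python sketch. It is a conceptual model under stated assumptions, not a NetVisor OS API: the `Switch` class, the `switches_hosting_vnet` function, and the switch and cluster names in the usage note are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Switch:
    name: str
    cluster: Optional[str] = None  # name of the cluster pair, if any


def switches_hosting_vnet(scope: str, origin: Switch, fabric: List[Switch]) -> List[Switch]:
    """Return the switches on which a vNET created from `origin` exists."""
    if scope == "local":
        # Only the switch where the vNET is created.
        return [origin]
    if scope == "cluster":
        if origin.cluster is None:
            raise ValueError(f"{origin.name} is not part of a cluster")
        # Both members of the cluster pair.
        return [s for s in fabric if s.cluster == origin.cluster]
    if scope == "fabric":
        # Every switch in the same fabric.
        return list(fabric)
    raise ValueError(f"unknown scope: {scope!r}")
```

With a fabric of two clustered leaf switches and one spine, a vNET created on the first leaf exists on one switch with scope local, on both leaves with scope cluster, and on all three switches with scope fabric.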

After a vNET is created, the network or fabric administrator can allocate resources to it when new objects (such as VLANs, vRouters, etc.) are created in the fabric.

Although vNETs can be compared to Virtual Routing and Forwarding (VRF) instances, vNETs have more capabilities than VRFs. vNETs not only provide an isolated Layer 3 domain, but also allow VLANs and MAC addresses to overlap between vNETs. In other words, a vNET provides complete isolation of the data plane and control plane, along with the management plane if a vNET Manager is associated with it (see below for the description of a vNET Manager).

Each vNET has a single point of management. As the network/fabric administrator, you can create vNETs and assign ownership of each vNET to individuals with responsibility for managing those resources and with separate usernames and passwords for each vNET manager.

NetVisor OS supports two types of vNETs: Public vNET and Private vNET.

Public vNET

A Public vNET is the normal vNET, which uses the default 4K VLAN space available on a switch. In this case, the default vNET and any other vNET created share the range of 0-4095 VLAN IDs available on the switch, with no option to have overlapping VLAN IDs across vNETs.

By default, a vNET is created as a Public vNET, although with overlay networks and multitenancy requirements, you can use Private vNETs almost exclusively.

Private vNET

A Private vNET uses an independent 4K VLAN space, where the vNET administrator can create any VLAN ID they prefer, regardless of whether that ID is used in the default vNET or in another tenant vNET. That is, a Private vNET supports VLAN re-use. NetVisor OS supports this functionality by providing an internal VLAN mapping in the hardware tables.

Because the hardware architecture on Open Networking platforms does not offer unlimited resources, the hardware tables must be shared between the default vNET and the other vNETs created. Arista provides a way to limit the number of VLANs that can be created in a Private vNET, so that one tenant creating too many VLANs cannot exhaust the hardware resources on the switches and prevent other tenants from creating their VLANs.
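The reasoning behind the per-vNET VLAN limit can be sketched as a simple quota over a shared table. This Python model is an assumption-laden illustration, not the NetVisor OS implementation: the `PrivateVlanQuota` class, its methods, and the table size used in the usage note are hypothetical.

```python
class PrivateVlanQuota:
    """Shares a fixed number of hardware VLAN entries among private vNETs."""

    def __init__(self, hardware_entries: int):
        self.free_entries = hardware_entries
        self.limits = {}   # vNET name -> maximum VLANs it may create
        self.used = {}     # vNET name -> VLANs created so far

    def add_vnet(self, vnet: str, max_vlans: int) -> None:
        self.limits[vnet] = max_vlans
        self.used[vnet] = 0

    def create_vlan(self, vnet: str) -> bool:
        """Return True if the VLAN is created, False if it is refused."""
        if self.used[vnet] >= self.limits[vnet]:
            return False          # tenant reached its own limit
        if self.free_entries == 0:
            return False          # shared hardware table is full
        self.used[vnet] += 1
        self.free_entries -= 1
        return True
```

With a table of 10 entries and limits of 6 for one tenant and 4 for another, the first tenant is refused after its sixth VLAN, which leaves the remaining four entries available to the second tenant. Without the limit, the first tenant could consume all 10 entries.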

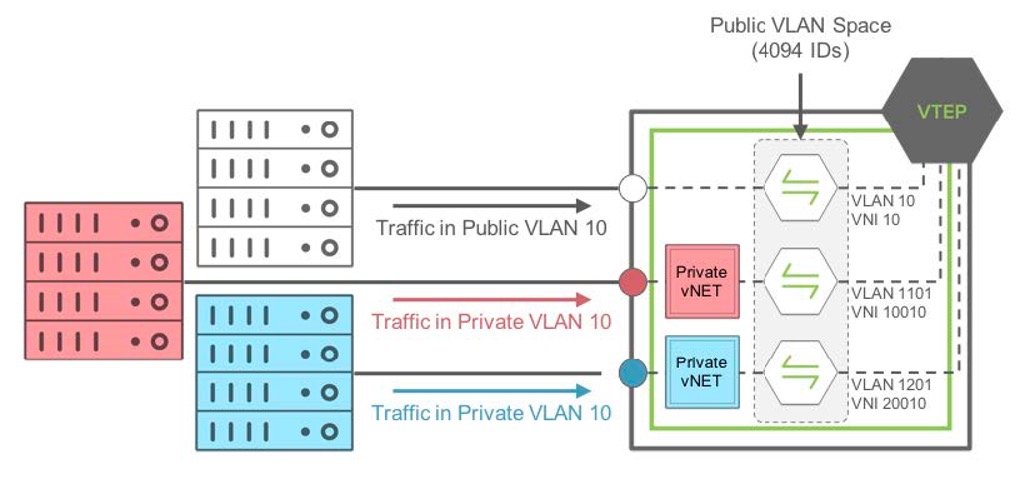

To give each tenant an independent 4K VLAN ID space, a mapping between the two types of VLANs (Public VLANs and Private VLANs) is required. This mapping is called vNET VLANs.

Note: Private vNETs are extensively used on Ericsson’s NRU-xx platforms as they support multiple containers.

vNET VLANs

A vNET VLAN consists of:

- Public VLAN - A Public VLAN is a VLAN that uses the default VLAN ID space on a switch. When a network/fabric administrator creates a VLAN on any NetVisor switch, which has only the default vNET (no other vNET is created), it takes the VLAN IDs in the Public VLAN space (0-4095 range).

- Private VLAN - A Private VLAN is a user-defined VLAN ID in a private space that is independent of the actual VLAN ID (in the public space) used on the switch to learn Layer 2 entries for this bridge domain. When the switch receives a frame with an 802.1Q tag using the Private VLAN ID, it translates this VLAN ID to an internal VLAN ID in the 4K Public VLAN space.

For a Private VLAN to be transported over a VXLAN infrastructure, it requires a VNI to be associated to it so that it can be identified as unique in the shared (or public) Layer 2 domain.

For details, see the Configuring VXLAN and Configuring Advanced Layer 2 Transport Services chapters of this Configuration Guide.

The following diagram (Figure 18-3) depicts the relationship between Public and Private VLANs in a Private vNET implementation:

Fig 18-3: Private VLAN to Public VLAN Mapping in Private vNETs

In this example (Figure 18-3), the traffic coming on a managed port from the red private vNET, using private VLAN ID 10 is mapped internally to the public VLAN ID 1101. Similarly, traffic coming on a managed port in the blue private vNET, using private VLAN ID 10 as well is mapped internally to the public VLAN ID 1201.

The mapping is done automatically in NetVisor OS by defining a pool of usable public VLAN IDs for private vNETs.

Note: Traffic from the red private VLAN 10 and the blue private VLAN 10 is NOT bridged, because the two VLANs are not in the same bridge domain internally. Also, traffic coming on a port in the default vNET using VLAN 10 (here, public VLAN 10) is mapped internally to the same public VLAN 10.
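The mapping behavior described above can be modeled in Python as follows. This is a minimal sketch, not the NetVisor OS implementation: the `PrivateVlanMapper` class, its method names, and the pool range are hypothetical, the allocation order (lowest free ID first) is an assumption, and the internal IDs it produces therefore do not necessarily match those shown in Figure 18-3. The sketch also does not check that VLANs in the default vNET stay outside the pool.

```python
class PrivateVlanMapper:
    """Maps (vNET, private VLAN ID) pairs to internal public VLAN IDs."""

    def __init__(self, public_pool):
        # Public VLAN IDs set aside for private vNETs (hypothetical range).
        self._free = sorted(public_pool)
        self._mapping = {}

    def internal_vlan(self, vnet, vlan_id: int) -> int:
        if not 0 <= vlan_id <= 4095:
            raise ValueError("VLAN ID must be in the 0-4095 range")
        if vnet is None:
            # Default vNET: the public VLAN ID is used as-is.
            return vlan_id
        key = (vnet, vlan_id)
        if key not in self._mapping:
            if not self._free:
                raise RuntimeError("no public VLAN IDs left in the pool")
            # Assumed policy: take the lowest free public ID.
            self._mapping[key] = self._free.pop(0)
        return self._mapping[key]

    def bridged(self, a, b) -> bool:
        """Two (vNET, VLAN ID) pairs bridge only if they share an internal VLAN."""
        return self.internal_vlan(*a) == self.internal_vlan(*b)
```

With a pool such as `range(1101, 1111)`, `("red", 10)` and `("blue", 10)` receive different internal VLAN IDs, so `bridged(("red", 10), ("blue", 10))` is false, while `internal_vlan(None, 10)` returns 10 for the default vNET.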

vNET Ports

Apart from the VLAN and MAC table isolation mentioned above, a complete isolation for each tenant at the physical port level is required. To achieve this isolation at the access ports level, NetVisor OS introduces different types of ports (explained below) to address different scenarios in a shared network.

Managed Port

A managed port is an access port that is associated with only one tenant (see Fig 18-1). That is, this port is dedicated to a vNET and cannot be shared with other vNETs configured on the same switch. The managed port is typically used to connect and isolate resources dedicated to a particular tenant such as servers or any other resource that is not an active Layer 2 node.

Note: As an access port, a managed port does not support spanning tree (STP). In this case, the Layer 2 loop prevention mechanism is implemented through another feature called Block-Loops.

A managed port can be configured as an “access port” or a “Dot1q Trunk port” when the VLANs carried on that port are part of the same private vNET. It transports only private VLANs belonging to the same vNET. It also supports link aggregation (IEEE standard 802.3ad) on a single switch or across two switches that are part of the same cluster, which is called virtual LAG (vLAG) on Arista switches.

Shared Port

As the name suggests, a shared port (see Fig 18-1) is an access port that can serve different tenants on the same port where a shared resource is connected. That is, this port is not dedicated to a vNET and can be shared with other vNETs configured on the same switch. It is typically used to connect shared resources like gateways, firewalls, or even hypervisors hosting several VM servers from different tenants.

A shared port is always configured as a “Dot1q Trunk port” transporting only public VLANs from the same vNET or different vNETs on the same port. It also supports link aggregation (IEEE standard 802.3ad) on a single switch or across two switches that are part of the same cluster, which is called virtual LAG (vLAG) on Arista switches.

Underlay Port

In a vNET design, the underlay network topology is shared by all vNETs configured in the fabric. To avoid dedicating one underlay port (per tenant) for connecting leaf switches to spine switches, NetVisor OS cloud fabric has abstracted the underlay ports from the vNET so as to leave their management to the network administrator.

Underlay ports are the ports that interconnect spine and leaf switches or two switches that are part of the same cluster (see Fig 18-1). These ports are not configurable for the private vNETs, but are configured automatically based on the underlay design defined by the network administrator.
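The rules for the three port types can be summarized as a small validation function. The Python sketch below is a conceptual restatement of the text above, not NetVisor OS behavior verified against the product: the `Vlan` class and the `tenant_can_assign` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Vlan:
    vlan_id: int
    vnet: str
    private: bool  # True for a private VLAN, False for a public VLAN


def tenant_can_assign(port_type: str, port_vnet: Optional[str], vlan: Vlan) -> bool:
    """Return True if the VLAN may be configured on a port of this type."""
    if port_type == "managed":
        # Dedicated to one vNET: carries only private VLANs of that vNET.
        return vlan.private and vlan.vnet == port_vnet
    if port_type == "shared":
        # Trunk shared by tenants: carries only public VLANs, from any vNET.
        return not vlan.private
    if port_type == "underlay":
        # Not configurable from a vNET; set up from the underlay design.
        return False
    raise ValueError(f"unknown port type: {port_type!r}")
```

For example, a private VLAN 10 of the red vNET is accepted on a managed port owned by red, rejected on a managed port owned by blue, and rejected on a shared port, which accepts only public VLANs.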

vNET Manager

A vNET Manager is a container or portal that is created on a fabric node and is associated to a vNET to provide a dedicated management portal for the specified vNET. A vNET Manager is a completely stateless object, but it is deployed in high availability (HA) mode to ensure the tenant never loses management access to the vNET.

A vNET Manager in high availability mode has two states:

- Active vNET Manager: preferred container to manage resources allocated to a vNET. The vNET Administrator uses this container to configure, through the CLI or API, the resources allocated to this vNET by the network administrator.

- Standby vNET Manager: container in standby mode, which shares the same IP address with the Active vNET Manager container to offer high availability when the Active container fails or becomes inaccessible.
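The active/standby behavior can be pictured with the following Python sketch. It is a simplified model only: the class names, the `healthy` flag, and the address `192.0.2.10` (from the documentation address range) are hypothetical, and the sketch does not describe how NetVisor OS actually detects failures or moves the shared IP address.

```python
from dataclasses import dataclass


@dataclass
class VnetManagerContainer:
    switch: str            # fabric node hosting the container
    healthy: bool = True


class VnetManagerHA:
    """An HA pair of vNET Manager containers behind one shared IP address."""

    def __init__(self, ip: str, active: VnetManagerContainer,
                 standby: VnetManagerContainer):
        self.ip = ip
        self.active = active
        self.standby = standby

    def endpoint(self):
        """Return (ip, switch) for the container currently serving the tenant."""
        if not self.active.healthy and self.standby.healthy:
            # Failover: the standby takes over the same IP address.
            self.active, self.standby = self.standby, self.active
        if not self.active.healthy:
            raise RuntimeError("no vNET Manager container is reachable")
        return self.ip, self.active.switch
```

Because both containers share the same IP address, the tenant keeps connecting to the same address after a failover; only the switch that answers changes.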

Guidelines for vNET Manager

Consider the following guidelines while creating a vNET Manager:

- A vNET Manager is not required for creating a vNET. It is created only to provide a dedicated management portal to the tenant.

- A vNET Manager container is an object local to the switch where it is created. In other words, when the network administrator creates a vNET with a fabric scope, the vNET Manager associated with this vNET is created only on the switch where the command is issued.

- A vNET Manager can be created on any switch in a fabric only if local resources such as compute, memory, and storage are available.

vNET Router

A vNET vRouter is a network service container that provides Layer 3 services with dynamic routing control plane to a vNET. A vNET can be created in one of the following ways:

- Without a vRouter container (Layer 2-only vNET)

- With one single vRouter (Layer 3 vNET)

- With more than one vRouter (Multi-VRF model in a vNET)

The first vRouter associated with a vNET builds the vNET (default) routing table. For some designs, a vNET can have more than one vRouter to support multiple routing tables. This can be achieved by creating additional vRouter containers associated with the same vNET. Unlike in a traditional multi-VRF router, multiple vRouter containers ensure complete isolation between routing instances within the same vNET by maintaining isolation of resources between routing domains. Later, each vRouter can be extended to support multi-VRF to help maximize the scale of VRFs without depending on resource-consuming vRouter containers.

Note: A vRouter in any vNET supports the same feature set that a vRouter created in the default vNET supports.

vNET Administrator

A vNET administrator (referred to as vNET-admin) account is created when a vNET Manager container is created. This account has full read/write access to its associated vNET. Typically, when a vNET is created, an associated vNET Manager zone is also created (if you specify the create-vnet-mgr parameter) to host all vNET-related services and to provide administrative access for managing these resources. The container zone is typically located on the same switch on which the vNET is created and consumes space on that device.

A vNET-admin account can access only its own vNET Manager and can see resources belonging to its vNET only. The vNET-admin is responsible for the creation/deletion of the following resources in its vNET:

- vNET VLANs/VNIs

- LAGs/vLAGs for Managed ports associated to the vNET

- vNET vRouters

- VXLAN Tunnels for the vNET

- L3 routing protocols for the vNET

Using the vNET administration credentials, the vNET admin can use Secure Shell (SSH) to connect to the vNET manager and access a subset of the NetVisor OS CLI commands to manage a specific vNET. This way, multiple tenants can share a fabric with each managing a vNET with security, traffic, and resource protection from other vNETs.

Starting with NetVisor OS version 7.0.0, the vNET admin can use REST API to access, configure, and manage the vNET resources using the Arista NetVisor UNUM Management Platform.

Network Administrator (Fabric Administrator)

The network administrator (referred to as network-admin) is the equivalent of the root account on a Linux distribution. It has all the privileges to create, modify, or delete any resources created on any fabric node. Besides having the same privileges that a vNET-admin has, the network-admin can also manage the following fabric attributes:

- Fabric nodes

- Clusters

- vNETs

- All Ports

- VTEPs

- vFlows (Security/QoS)

- Software upgrades

Note: The network-admin can create separate user names and passwords for each vNET manager.
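The division of responsibilities between the two roles can be summarized in a short Python sketch. It is an illustration built only from the two resource lists in this section, not the NetVisor OS access-control implementation: the resource labels and the `may_manage` function are hypothetical.

```python
# Resources a vNET-admin creates and deletes inside its own vNET.
VNET_ADMIN_RESOURCES = frozenset({
    "vlan", "vni", "lag", "vlag", "vrouter", "vxlan-tunnel", "routing-protocol",
})

# Fabric attributes managed only by the network-admin.
NETWORK_ADMIN_ONLY = frozenset({
    "fabric-node", "cluster", "vnet", "port", "vtep", "vflow", "software-upgrade",
})


def may_manage(role: str, resource: str, resource_vnet=None, admin_vnet=None) -> bool:
    """Return True if the role may manage the given resource."""
    if role == "network-admin":
        # Same privileges as a vNET-admin, plus the fabric-wide attributes.
        return resource in VNET_ADMIN_RESOURCES | NETWORK_ADMIN_ONLY
    if role == "vnet-admin":
        # Limited to the listed resource types, and only within its own vNET.
        return (
            resource in VNET_ADMIN_RESOURCES
            and resource_vnet is not None
            and resource_vnet == admin_vnet
        )
    raise ValueError(f"unknown role: {role!r}")
```

For example, a vNET-admin of the red vNET may manage a vRouter in red but not one in blue, and may not manage clusters or software upgrades, which remain with the network-admin.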