EVPN - EVPN ARP Cache

VXLAN Ethernet VPN (EVPN)

Note: The VXLAN EVPN Border Gateway functions are supported only on the Dell S5200 Series switches.

Ethernet VPN (EVPN) is a standards-based technology created to overcome some of the limitations of Virtual Private LAN Service (VPLS), a popular MPLS-based technology specified in IETF RFCs for metro and wide area network (MAN/WAN) use. In data center deployments in particular, it became imperative to address VPLS limitations in areas such as multi-homing and redundancy, provisioning simplicity, flow-based load balancing, and multipathing.

Hence, as an evolution of VPLS, EVPN was defined in RFC 7432 (later updated by RFC 8584) as a multiprotocol BGP (MP-BGP) and MPLS-based solution that implements the requirements specified in RFC 7209.

The initial implementation of EVPN leveraged the benefits of an MPLS Label Switched Path (LSP) infrastructure, such as fast reroute and resiliency. As an alternative, the EVPN RFC also includes support for an IP or IP/GRE (Generic Routing Encapsulation) tunneling infrastructure.

As a further evolution, RFC 8365 extended EVPN to support various other encapsulations, including VXLAN, which is signaled as tunnel encapsulation type 8.
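To make the encapsulation signaling concrete, the following Python sketch encodes and decodes the 8-octet BGP Encapsulation Extended Community (Type 0x03, Sub-Type 0x0c, four reserved octets, and a 2-octet tunnel type, as defined in RFC 5512 and retained in RFC 9012), which RFC 8365 uses to advertise VXLAN as tunnel type 8. The function names are hypothetical; this is an illustration of the wire format, not NetVisor OS code.

```python
import struct

# Encapsulation Extended Community layout (RFC 5512, retained in RFC 9012):
# Type (1 octet) = 0x03, Sub-Type (1 octet) = 0x0c,
# Reserved (4 octets), Tunnel Type (2 octets), in network byte order.
ENCAP_EC_FORMAT = "!BB4xH"
ENCAP_EC_TYPE = 0x03
ENCAP_EC_SUBTYPE = 0x0C
TUNNEL_TYPE_VXLAN = 8  # tunnel type RFC 8365 uses for VXLAN


def encode_encap_community(tunnel_type: int) -> bytes:
    """Build the 8-octet extended community advertising tunnel_type."""
    if not 0 <= tunnel_type <= 0xFFFF:
        raise ValueError("tunnel type must fit in 16 bits")
    return struct.pack(ENCAP_EC_FORMAT, ENCAP_EC_TYPE, ENCAP_EC_SUBTYPE, tunnel_type)


def decode_tunnel_type(community: bytes) -> int:
    """Return the tunnel type carried by an Encapsulation Extended Community."""
    ec_type, subtype, tunnel_type = struct.unpack(ENCAP_EC_FORMAT, community)
    if (ec_type, subtype) != (ENCAP_EC_TYPE, ENCAP_EC_SUBTYPE):
        raise ValueError("not an Encapsulation Extended Community")
    return tunnel_type


vxlan_ec = encode_encap_community(TUNNEL_TYPE_VXLAN)
print(vxlan_ec.hex())  # 030c000000000008
```

The reserved octets are emitted as padding (`4x`), so only the type, sub-type, and tunnel type need to be supplied.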

By that time, VXLAN had become the prevalent transport in the data center for implementing virtual Layer 2 bridged connectivity between fabric nodes. EVPN could therefore serve as an MP-BGP-based control plane for VXLAN, using specialized Network Layer Reachability Information (NLRI) to carry both Layer 2 and Layer 3 information for forwarding and tunneling purposes.
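As an illustration of how a single NLRI carries both Layer 2 and Layer 3 information, the sketch below models a simplified EVPN Route Type 2 (MAC/IP Advertisement route, RFC 7432). Following RFC 8365, it assumes the VNI is carried in the MPLS Label1 field and the BGP next hop identifies the remote VTEP. The class and function names are hypothetical, and fields such as the Ethernet Segment Identifier, Ethernet Tag, and Label2 are omitted for brevity.

```python
from dataclasses import dataclass
from ipaddress import ip_address
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class MacIpRoute:
    """Simplified EVPN Route Type 2 (MAC/IP Advertisement), RFC 7432.

    With VXLAN (RFC 8365), the 24-bit VNI is carried in the MPLS Label1
    field and the BGP next hop is the remote VTEP address.
    """
    route_distinguisher: str
    mac: str
    ip: Optional[str]  # the IP address is optional in a Type-2 route
    vni: int           # taken from MPLS Label1
    next_hop: str      # remote VTEP

    def __post_init__(self) -> None:
        if not 0 <= self.vni < 2**24:
            raise ValueError("VNI must fit in 24 bits")
        ip_address(self.next_hop)  # raises ValueError if malformed
        if self.ip is not None:
            ip_address(self.ip)


def split_route(route: MacIpRoute) -> Tuple[Dict, Dict]:
    """Return the Layer 2 and Layer 3 state one Type-2 route contributes."""
    # Layer 2: (VNI, MAC) -> remote VTEP to tunnel frames to
    l2 = {(route.vni, route.mac): route.next_hop}
    # Layer 3: (VNI, IP) -> MAC binding, only when an IP address is present
    l3 = {(route.vni, route.ip): route.mac} if route.ip is not None else {}
    return l2, l3


host = MacIpRoute("10.0.0.3:100", "00:11:22:33:44:55", "192.168.10.5", 10100, "10.0.0.3")
mac_only = MacIpRoute("10.0.0.3:100", "00:11:22:33:44:66", None, 10100, "10.0.0.3")
print(split_route(host))
print(split_route(mac_only))
```

A route with an IP address yields both a MAC-table entry and an IP-to-MAC binding, while a MAC-only route yields Layer 2 state alone.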

In EVPN parlance, VXLAN is a Network Virtualization Overlay (NVO) data plane encapsulation whose identifiers, the VNIs, are also called NVO instance identifiers. VNIs can be mapped one-to-one or many-to-one to EVPN instances (EVIs). In the typical use case, VNIs are globally unique identifiers; however, the EVPN RFC also supports using them as locally significant values, similar to MPLS labels.
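A minimal sketch of the VNI-to-EVI mapping follows. It assumes globally unique VNIs (the typical case), so a VNI alone identifies its EVI; the locally significant mode is not modeled. The class name `VniEviMap` and its methods are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Set


class VniEviMap:
    """Hypothetical table assuming globally unique VNIs: each VNI belongs to
    exactly one EVI, while an EVI holds one VNI (one-to-one) or several
    (many-to-one)."""

    def __init__(self) -> None:
        self._evi_of: Dict[int, int] = {}  # VNI -> EVI
        self._vnis_of: DefaultDict[int, Set[int]] = defaultdict(set)  # EVI -> VNIs

    def bind(self, vni: int, evi: int) -> None:
        if not 0 <= vni < 2**24:
            raise ValueError("VNI must fit in 24 bits")
        current = self._evi_of.get(vni)
        if current is not None and current != evi:
            raise ValueError(f"VNI {vni} is already bound to EVI {current}")
        self._evi_of[vni] = evi
        self._vnis_of[evi].add(vni)

    def evi_for(self, vni: int) -> int:
        return self._evi_of[vni]

    def vnis_in(self, evi: int) -> Set[int]:
        return set(self._vnis_of.get(evi, set()))

    def is_one_to_one(self, evi: int) -> bool:
        return len(self._vnis_of.get(evi, set())) == 1


table = VniEviMap()
table.bind(10100, 100)  # one-to-one: EVI 100 carries a single VNI
table.bind(20001, 200)  # many-to-one: EVI 200 carries two VNIs
table.bind(20002, 200)
print(table.evi_for(20002), sorted(table.vnis_in(200)), table.is_one_to_one(100))
```

Rejecting a second EVI for an already-bound VNI is what keeps the mapping one-to-one or many-to-one, never one-to-many.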

In a VXLAN network using EVPN, a VTEP node is called a Network Virtualization Edge (NVE) or Provider Edge (PE) node. An NVE node may be a Top-of-Rack (ToR) switch or a virtual machine, and it can also be a border node.

Starting with NetVisor OS release 6.1.0, Arista Networks implements EVPN to interconnect multiple fabric pods and extend the VXLAN overlay across them, with support for both IPv4 and IPv6. EVPN’s control plane enables network architects to build larger multi-pod designs, in which each pod can comprise up to 32 leaf nodes. It also allows an Arista pod to interoperate with pods built from third-party vendors’ nodes.

In this implementation, VTEP objects, subnets, and VRFs are naturally extended to communicate across pods. To do so, NetVisor OS uses EVPN in a pragmatic, integrated, and standards-compatible way, implementing a BGP-based control plane that enables multi-hop VXLAN-based forwarding across pods through several key fabric enhancements, described in the following sections.

About EVPN Border Gateways

Note: As of NetVisor OS release 6.1.0, only leaf nodes can be designated as border gateways.

In Arista’s fully interoperable implementation, EVPN’s message exchange is supported only on specially designated border nodes, deployed individually or in redundant pairs (i.e., in clusters running VRRP).

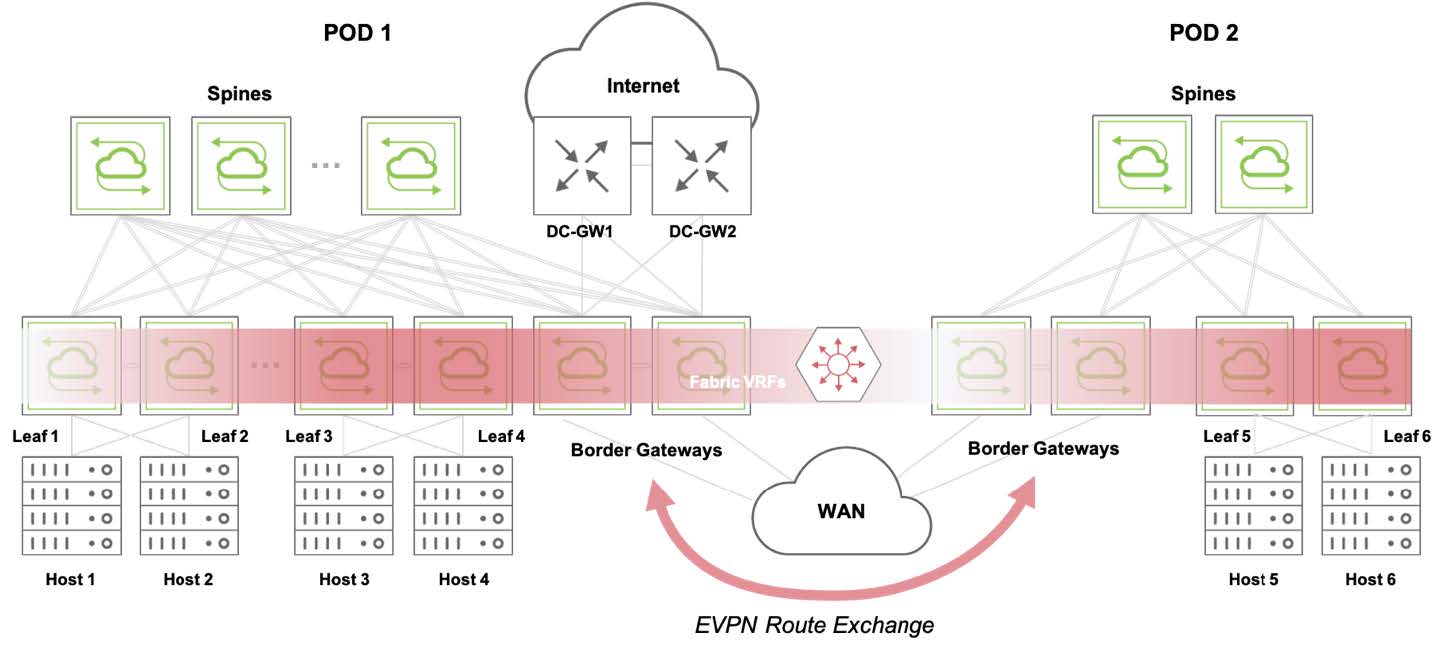

Such border nodes are commonly called Border Gateways (BGWs) because they implement the EVPN Border Gateway functions, which are described in detail later. In essence, they exchange messages with external EVPN-capable nodes (Arista or other vendors’ devices) and propagate external EVPN routes within each pod, translating them where needed, as depicted in the Border Gateways and EVPN Route Exchange figure.
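The description above does not detail NetVisor OS’s translation rules, so the following is only a hypothetical sketch of one common border-gateway pattern: when re-advertising an external EVPN route into the pod, the BGW substitutes its own VTEP address as the next hop and, optionally, maps the external VNI to a pod-local VNI. The function name, the `vni_translation` table, and the choice of next-hop address are all assumptions.

```python
from dataclasses import dataclass, replace
from typing import Mapping, Optional


@dataclass(frozen=True)
class EvpnRoute:
    mac: str
    ip: Optional[str]
    vni: int
    next_hop: str  # VTEP that traffic for this MAC/IP is tunneled to


def reoriginate_into_pod(route: EvpnRoute, bgw_vtep: str,
                         vni_translation: Mapping[int, int]) -> EvpnRoute:
    """Hypothetical BGW step: return a copy of an external route with the
    BGW's own VTEP as next hop, so pod-internal VTEPs tunnel to the BGW.
    The VNI is remapped only when vni_translation has an entry for it."""
    local_vni = vni_translation.get(route.vni, route.vni)
    return replace(route, vni=local_vni, next_hop=bgw_vtep)


external = EvpnRoute("00:aa:bb:cc:dd:ee", "10.20.0.7", 50010, "192.0.2.10")
internal = reoriginate_into_pod(external, "10.255.0.1", {50010: 10010})
print(internal)
```

Because the route is frozen, `replace` returns a new object and the externally learned route is left unchanged.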

Border Gateways and EVPN Route Exchange

Layer 3 EVPN - EVPN ARP Cache

Arista NetVisor UNUM and Insight Analytics share a set of features and functions throughout the user interface (UI). Refer to the Common Functions section for more information on using these features and functions.

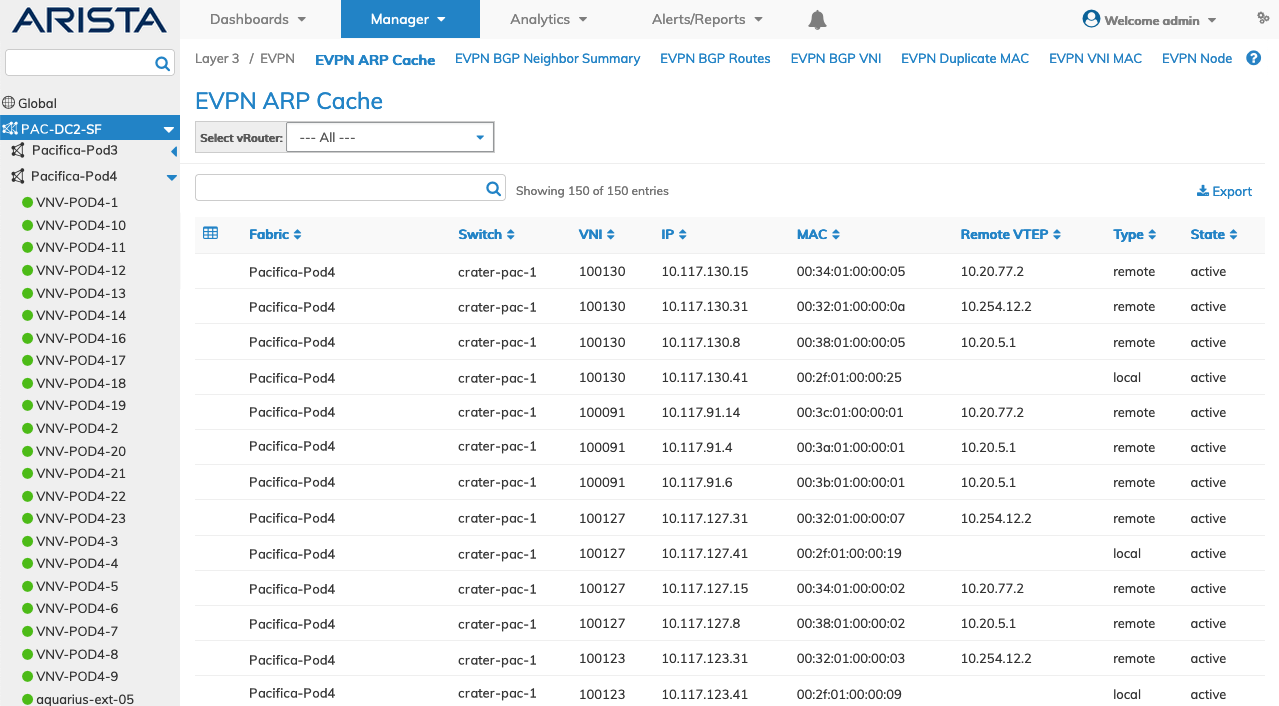

Selecting Manager → Layer 3 → EVPN ARP Cache displays the EVPN ARP Cache dashboard.

Select the applicable Fabric from the left-hand navigation bar; the dashboard updates to show all EVPN ARP Cache entries from all switches in the selected Fabric.

Note: If no entries exist, a "No Data Exists" message is displayed. You must first configure an entry on a switch; prerequisite settings and configuration may be required.

The dashboard displays a list of existing EVPN ARP Cache entries by Fabric. The displayed parameters include Switch, VNI, IP, MAC, Remote VTEP, Type, and State.
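The Fabric dashboard can be thought of as a filtered view over per-switch tables. The sketch below models one row with the columns listed above and reproduces the "No Data Exists" case. The Fabric-to-switch membership map and all names are hypothetical, and the Type and State values are placeholder strings because the dashboard description does not enumerate them.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class ArpCacheEntry:
    """One row of the EVPN ARP Cache dashboard; fields mirror its columns."""
    switch: str
    vni: int
    ip: str
    mac: str
    remote_vtep: str
    entry_type: str  # "Type" column; values not enumerated in this sketch
    state: str       # "State" column; values not enumerated in this sketch


def fabric_view(entries: List[ArpCacheEntry], fabrics: Dict[str, Set[str]],
                fabric: str) -> List[ArpCacheEntry]:
    """Rows from every switch in the selected Fabric (hypothetical model)."""
    members = fabrics.get(fabric, set())
    rows = [e for e in entries if e.switch in members]
    return sorted(rows, key=lambda e: (e.switch, e.vni, e.ip))


def render(rows: List[ArpCacheEntry]) -> List[str]:
    if not rows:
        return ["No Data Exists"]
    return [f"{e.switch} {e.vni} {e.ip} {e.mac} {e.remote_vtep} {e.entry_type} {e.state}"
            for e in rows]


fabrics = {"fabric-1": {"leaf1", "leaf2"}, "fabric-2": {"leaf3"}}
T, S = "example-type", "example-state"  # placeholder Type/State values
entries = [
    ArpCacheEntry("leaf2", 10100, "192.168.10.5", "00:11:22:33:44:55", "10.0.0.3", T, S),
    ArpCacheEntry("leaf1", 10100, "192.168.10.6", "00:11:22:33:44:66", "10.0.0.4", T, S),
    ArpCacheEntry("leaf3", 20200, "192.168.20.5", "00:11:22:33:44:77", "10.0.0.1", T, S),
]
for line in render(fabric_view(entries, fabrics, "fabric-1")):
    print(line)
```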

Manager EVPN ARP Cache Fabric Dashboard

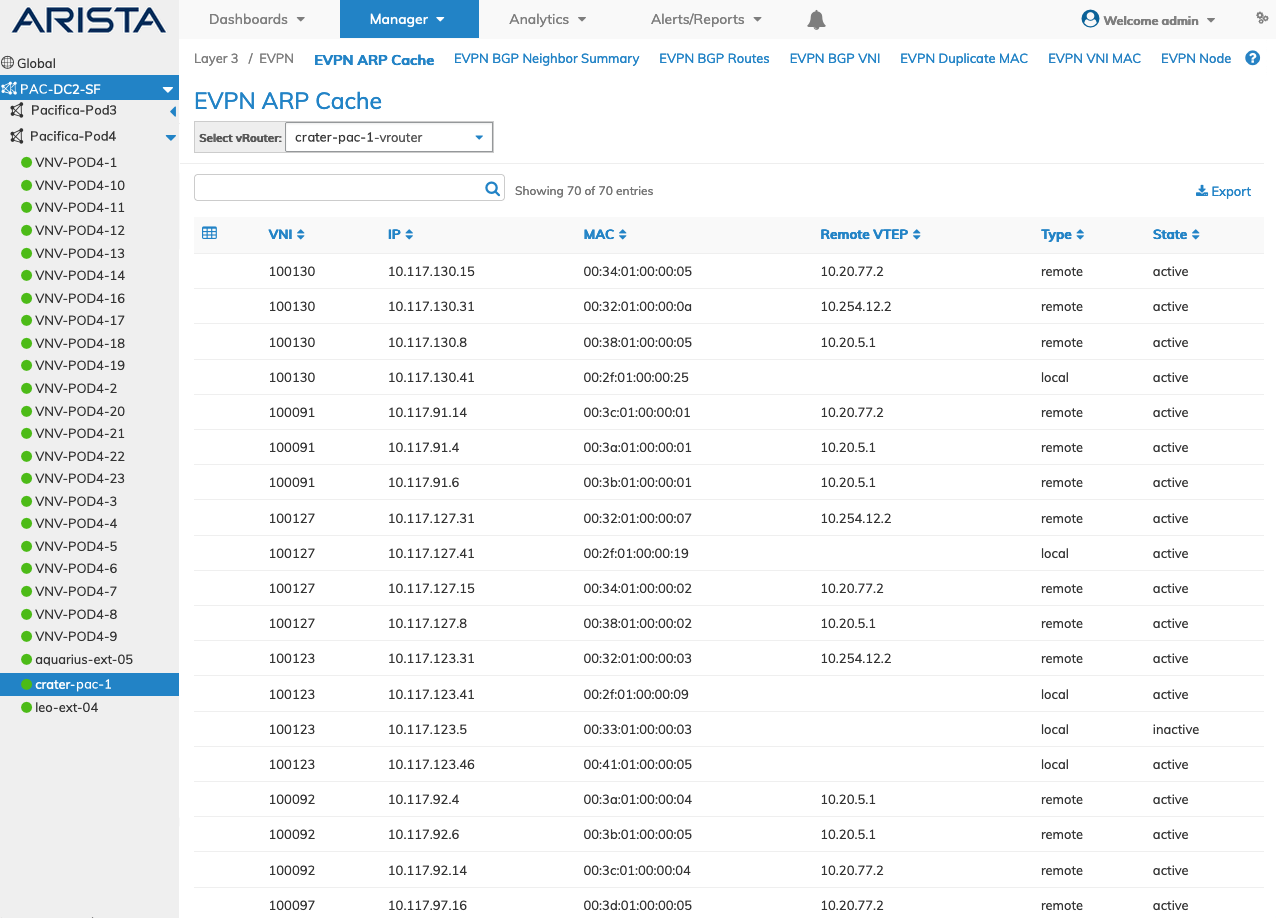

Select the applicable switch from the left-hand navigation (LHN) bar, and then select a vRouter from the vRouter drop-down menu.

The dashboard updates automatically to show the EVPN ARP Cache entries for that switch.

The dashboard displays a list of existing EVPN ARP Cache entries by VNI. The displayed parameters include IP, MAC, Remote VTEP, Type, and State.
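The per-switch dashboard groups entries by VNI. This hypothetical sketch filters rows for one switch and groups them by VNI in ascending order; it does not model the vRouter selection, because the dashboard description does not explain how that selection scopes the list. All names and the placeholder Type and State values are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class ArpCacheEntry:
    switch: str
    vni: int
    ip: str
    mac: str
    remote_vtep: str
    entry_type: str  # "Type" column (values not enumerated here)
    state: str       # "State" column (values not enumerated here)


def switch_view_by_vni(entries: Iterable[ArpCacheEntry],
                       switch: str) -> Dict[int, List[ArpCacheEntry]]:
    """Group one switch's rows by VNI, with VNIs in ascending order."""
    grouped: Dict[int, List[ArpCacheEntry]] = defaultdict(list)
    for e in entries:
        if e.switch == switch:
            grouped[e.vni].append(e)
    return {vni: grouped[vni] for vni in sorted(grouped)}


T, S = "example-type", "example-state"  # placeholder Type/State values
entries = [
    ArpCacheEntry("leaf1", 20200, "192.168.20.9", "00:11:22:33:44:88", "10.0.0.5", T, S),
    ArpCacheEntry("leaf1", 10100, "192.168.10.6", "00:11:22:33:44:66", "10.0.0.4", T, S),
    ArpCacheEntry("leaf2", 10100, "192.168.10.5", "00:11:22:33:44:55", "10.0.0.3", T, S),
]
for vni, rows in switch_view_by_vni(entries, "leaf1").items():
    print(vni, [(r.ip, r.mac, r.remote_vtep) for r in rows])
```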

Manager EVPN ARP Cache Switch Dashboard